Interactive artificial intelligence (AI) agents have become quite popular in recent years. Businesses are increasingly adopting them in various forms to reduce employee strain and enhance the customer experience. The excitement over AI is understandable, and we at Alacriti have been on the forefront of these technologies so we can pass the benefits onto our customers.

AI is a big part of our focus here at Alacriti. We’re using the technology to help our customers automate manual processes and reach end-users via channels that didn’t exist just a few years ago. Ella – our AI-powered conversational bot that enables bill payments via Facebook Messenger, Amazon’s Alexa, and Google Home – is an example of this. In this post, we’ll go through some of what we’ve been doing to enable these AI agents to learn on their own.

While a pre-trained AI agent does a decent job at understanding and performing actions based on user inputs, it’s better to have an AI agent that continues to learn and understand its users better over time. One may ask how a bot can learn and improve. Well, how do humans learn and improve? We learn and improve when we realize something we’ve been doing isn’t working well and adapt ourselves to do better, based on those observations. A bot does the same thing!

The first thing a bot needs to do to be able to improve itself is identify areas it has not been performing well. The bot can then “consider” what it should have done instead, just like us humans. There are three aspects in which a bot can “learn” to do better:

- Intent Classification: Understanding what a user meant.

- Contextual Understanding: Taking an appropriate action based on the context off what was said.

- Entity Extraction: Extracting the parameters required to fulfill an intent. For example, extracting money from an account when a user asks to make a transfer.

In this post, we’ll focus on intent classification.

What is Intent Classification?

Simply put, intent classification is what a user means when she says something for the bot to take an action, or the process of understanding and classifying the user input into an intent. For example, if someone says, “Get my bill”, the intent is billDetails. If she says “I’d like to make a payment of $100”, the intent is payBill. So how do bots get better at this? Let’s dig in.

Any user input – whether it’s through Facebook Messenger, Amazon Echo, or any other platform, is forwarded to our bot. The bot uses a Natural Language Understanding (NLU) engine for intent classification and entity extraction. The bot takes an appropriate action based on the intent and the confidence score returned by the NLU engine. It is the NLU engine that must learn and improve to be able to understand the user better. The engine is initially trained with only a few sample user inputs and their corresponding intent labels. For Intent Classification to improve, we need to identify areas where the NLU engine has been performing poorly and correctly label those user inputs.

Obtaining Labelled User Inputs

It’s possible to have someone correctly label user inputs where the NLU engine has been performing poorly. However, that would be expensive and time consuming, as the bot would require continuous manual training. One way to avoid this is to have the bot ask users to clarify what they meant whenever the intent classification score falls below a certain threshold. The bot could then use these labeled user inputs to improve the NLU model. This might not always work, however, because users could label inputs incorrectly and possibly deteriorate the model.

One way to solve this problem is by running the user-labelled inputs through a clustering algorithm to see if similar inputs were tagged with the same intent. These user inputs would only be used to retrain the NLU model once there is sufficient affirmation. Below is a sample conversation to demonstrate how this would work. For the example, let’s assume the threshold score to be 0.7.

User: Hey

Bot (Detects greetUser intent. Score: 1.0): Hello, Michael. How can I help you?

User: I’d like to know my due amount.

Bot (Detects billDetails intent. Score: 0.95): You owe $138.75. This payment is due on Feb 21st, 2018. Would you like to make a payment?

User: I’d like to enable automated payments for my account.

Bot (Detects setupAutoPay intent. Score: 0.65): Did you mean 1. Setup AutoPay or 2. Make a payment?

User selects 1. Setup AutoPay.

Bot: Sure, I can do that. How much amount would you like to pay? 1. Current balance 2. Minimum balance 3. Current statement balance 4. Custom amount

User selects 2. Minimum balance.

Bot: Would you like to add a payment limit?

User: No.

Bot (Detects reject intent. Score: 1.0): Ok, I’m setting up AutoPay for your account with no payment limit from your default funding account with the minimum balance as the payment amount. Shall I proceed?

User: Yes. Go ahead.

Bot (Detects accept intent. Score: 0.95): Autopay is successfully set up. Can I help you with anything else?

User: No, that’s it. Thank you.

Bot (Detects gratitude intent. Score: 0.9): You’re welcome. Have a good day, Michael.

At the end of the conversation, we’d have the user-labelled input shown below. The new input won’t be used to retrain the NLU model directly, but it would be stored and compared against similar tags later for affirmation.

Input: I’d like to enable automated payments for my account.

Intent Label: setupAutoPay

Unsupervised Learning for Identifying Training Sentences

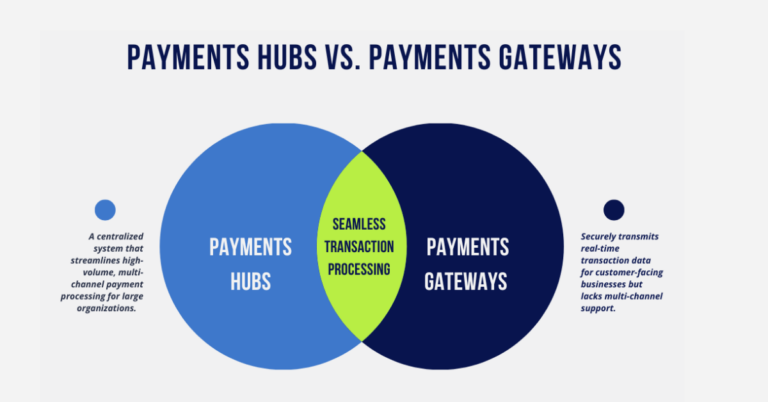

Assuming we now have tons of user-labelled inputs in our database, how can we identify genuine inputs? To do this, we’ll need to generate sentence vectors and use a machine learning approach called clustering. Clustering is an unsupervised learning technique that groups similar data points together.

There are two ways to generate sentence vectors: Tf-idf and Word2vec. Since we need to identify and group inputs similar in syntax, Tf-idf is more suitable as it solely focuses on syntax and not on meaning. Using Tf-idf, we can generate sentence vectors for all the untrained user inputs in our database. A sentence like “I’d like to transfer money” would look something like this after Tf-idf vectorization: [0.14 0.26 0.21 0.02 0.10 0.08 0.13 0.07].

Once sentence vectors are generated, it’s time to perform a clustering operation over the data. We use K-means algorithm for this, the most popular clustering algorithm. It’s simple, efficient, and well-suited for our needs. It does, however, assume prior knowledge of the data to choose an appropriate value for K, the number of clusters.

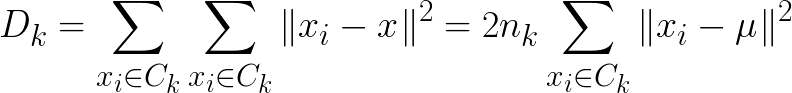

To find K, we need a metric to measure the compactness of the clusters. Once we have that, we can figure out the optimal number of clusters – K – for the available data. Between points in a given cluster C_k containing n_k points, the following metric represents the sum of intra-cluster distances:

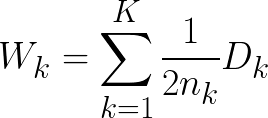

The normalized intra-cluster sums of squares are calculated as follows to measure the compactness of clustering:

This quantity, Wk can then be used in a manual technique known as the Elbow Method to identify the optimal K value. The graph of Wk vs. K would take a sharp turn (like an elbow) indicating the optimal number of clusters, K. But we cannot use this for two reasons – because it needs human input, and it’s difficult to spot the “elbow” most of the time.

The Gap Statistic method is an approach that doesn’t depend on human input. The idea behind this approach is to find a way to standardize the comparison of log W_k with a null reference distribution of the data, i.e. a distribution with no obvious clustering. The estimate for the optimal number of clusters K would be the value for which log W_k falls the farthest below this reference curve. Here’s the formula:

![]()

From the sentence clusters obtained with this optimal value of K, we pick one user input per cluster at random to retrain our NLU model.

We must then identify clusters with a dominant intent label and pick one sentence (with the same label) from each to be used as a training sentence for that intent. An intent label is considered dominant if at least two-thirds of the inputs in a cluster are labeled with it. To avoid manipulation, we need to ensure there is diversity among user inputs and users who have labelled sentences, per cluster. We do this by setting a minimum number of user inputs per cluster and ensuring there are enough distinct users who have labelled sentences in each cluster. The training sentences which meet these requirements are then used to retrain the NLU model.

We now have an AI agent that can learn on its own and get better at intent classification without any manual intervention. In our follow up post, we’ll discuss how to measure the NLU engine’s intent classification performance in a fully automated way using user feedback. This is essential to track the improvement or degradation of the NLU engine. Be sure to check back to our blog as we dive deeper into machine learning and how it all works!